It has been a minute since I’ve posted here about my Blender adventures – there was a long pause after Day 11 of a never-completed 50-day “make something every day” practice. The truth is, my interests are not singular enough for me to feel satisfied doing the same thing every day – I demand variety and flexibility. To that end, I’ve started documenting a new creative endeavor – VRDreamJournal – with the desire to play with a number of different software tools (including Blender) while thinking in objects, landscapes and architectures. Yes, sometimes the creations are explicitly in VR (either made in or deployed to a headset), but other times they are a glimpse into a virtual world.

In the latest for this endeavor, I’m picking up the pieces of what I had learned so far in Blender and woooooo, I have forgotten a lot. Good thing I blogged (said someone for the first time ever).

Let’s dive right into what I attempted to do. I’m really interested in adding water or fluid elements to the virtual scenes I’m creating in Blender, and there seem to be two ways to go about it (depending on your needs): (1) create a plane with a material that makes it look like water, or (2) use Blender’s fluid simulation (and yes, with the appropriate materials) to create a volume / mesh that behaves like water.

There might be more ways for those in the know, but this is what I’ve seen so far.

I decided to start with the first option because it’s really quite accessible (while still giving you a good bit to learn). I began by following along with this tutorial:

I actually didn’t even do the whole tutorial, as I was most focused on the water bit. A few key takeaways for me came with the introduction of the Musgrave Texture node, which seems to be a type of fractal noise.

From the website itself, the definition reads:

> Musgrave is a type of Fractal Noise. Simple Perlin Noise is generated multiple times with different scaling, and the results are combined in different ways depending on the Musgrave type.

This is quite helpful. Also, what isn’t Perlin noise? Important keywords / parameters to know:

- Dimension: this controls the intensity of the different instances of noise – large values REDUCE the visibility of smaller details.

- Lacunarity: this is the scale of each instance of noise relative to the previous one. I still need to wrap my head around this one.

Scale and Detail are also quite good, and they do what you’d intuitively expect (Scale sets the overall size of the pattern, Detail sets how many layers of noise get stacked). How nice.
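To get a feel for how Scale, Detail, Dimension and Lacunarity interact, here is a minimal Python sketch of fBm-style fractal noise (one of the Musgrave types) in one dimension. It follows Musgrave’s classic fBm recipe: each layer (octave) is sampled at `lacunarity` times the previous frequency, and its amplitude shrinks by a factor of `lacunarity ** -dimension`. The hand-rolled `perlin1d` gradient noise and the `musgrave_fbm` and `roughness` functions are hypothetical stand-ins, not Blender’s code; Blender’s own implementation works in up to 4D and may differ in details such as exactly how Detail maps to the number of octaves.

```python
import math
import random

# Hypothetical 1D gradient (Perlin-style) noise: each integer lattice point
# gets a slope of +1 or -1, and neighboring slopes are blended with
# Perlin's quintic fade curve. The result is 0 at every integer x.
_rng = random.Random(42)
_PERM = list(range(256))
_rng.shuffle(_PERM)
_PERM += _PERM  # duplicate so _PERM[i + 1] stays in range


def _fade(t: float) -> float:
    """Perlin's 6t^5 - 15t^4 + 10t^3 easing curve."""
    return t * t * t * (t * (t * 6 - 15) + 10)


def perlin1d(x: float) -> float:
    i = math.floor(x)
    f = x - i
    i &= 255
    g0 = f if _PERM[i] % 2 == 0 else -f                     # left slope
    g1 = (f - 1.0) if _PERM[i + 1] % 2 == 0 else (1.0 - f)  # right slope
    return g0 + _fade(f) * (g1 - g0)


def musgrave_fbm(x: float, scale: float = 5.0, detail: float = 2.0,
                 dimension: float = 2.0, lacunarity: float = 2.0) -> float:
    """fBm: sum of noise octaves, each `lacunarity` times finer and
    `lacunarity ** -dimension` times weaker than the one before."""
    p = x * scale                      # Scale: overall size of the pattern
    value, amplitude = 0.0, 1.0
    gain = lacunarity ** -dimension    # Dimension: how fast amplitudes fall off
    octaves = int(detail)              # Detail: number of whole octaves
    for _ in range(octaves):
        value += perlin1d(p) * amplitude
        amplitude *= gain
        p *= lacunarity                # Lacunarity: frequency step per octave
    remainder = detail - octaves       # fractional Detail blends in one more octave
    if remainder > 0:
        value += remainder * perlin1d(p) * amplitude
    return value


def roughness(values: list[float]) -> float:
    """Mean absolute change between neighboring samples."""
    return sum(abs(b - a) for a, b in zip(values, values[1:])) / (len(values) - 1)


xs = [i / 2000 for i in range(2001)]
smooth = [musgrave_fbm(x, detail=6, dimension=2.0) for x in xs]
rough = [musgrave_fbm(x, detail=6, dimension=0.0) for x in xs]
print(roughness(rough) > roughness(smooth))  # True
```

With Dimension at 0 every octave keeps full strength, so the fine layers dominate and the curve comes out jagged; at 2 each layer is a quarter as strong as the one before, which is the “large values REDUCE the visibility of smaller details” behavior in action.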

Importantly, if you switch over to 4D, you get an extra W parameter that lets you play with the noise as a whole (the definition for this value is as follows: “Texture coordinate to evaluate the noise at.” I don’t know what that means!).
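One way to picture W: in 4D mode the noise is evaluated at the point (x, y, z) plus the extra W coordinate, so keyframing W means each frame samples a slightly different “slice” of the noise while the pixels stay put. Here is a minimal sketch of that idea under loud simplifications: it swaps Perlin noise for simpler value noise and drops down to one spatial axis plus W. `value_noise`, `render_frame` and `mean_abs_diff` are hypothetical helpers for illustration, not Blender API.

```python
import math
import random

# Hypothetical stand-in for 4D noise: 2D *value* noise where the first axis
# is a spatial coordinate and the second axis plays the role of W.
SIZE = 64
_rng = random.Random(7)
_LATTICE = [[_rng.uniform(-1.0, 1.0) for _ in range(SIZE)] for _ in range(SIZE)]


def _smoothstep(t: float) -> float:
    return t * t * (3 - 2 * t)


def value_noise(x: float, w: float) -> float:
    """Smoothly interpolate the random lattice values surrounding (x, w)."""
    xi, wi = math.floor(x), math.floor(w)
    sx, sw = _smoothstep(x - xi), _smoothstep(w - wi)
    x0, x1 = xi % SIZE, (xi + 1) % SIZE
    w0, w1 = wi % SIZE, (wi + 1) % SIZE
    row0 = _LATTICE[w0][x0] + sx * (_LATTICE[w0][x1] - _LATTICE[w0][x0])
    row1 = _LATTICE[w1][x0] + sx * (_LATTICE[w1][x1] - _LATTICE[w1][x0])
    return row0 + sw * (row1 - row0)


def render_frame(w: float, width: int = 64, scale: float = 16.0) -> list[float]:
    """Sample one row of 'pixels' with W held fixed for the whole frame."""
    return [value_noise(i / width * scale, w) for i in range(width)]


def mean_abs_diff(a: list[float], b: list[float]) -> float:
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


# Keyframing W amounts to giving each frame a slightly different W value.
frames = [render_frame(0.5 + 0.02 * n) for n in range(50)]
step_change = mean_abs_diff(frames[0], frames[1])    # neighboring frames
total_change = mean_abs_diff(frames[0], frames[-1])  # start vs end of the clip
print(f"frame-to-frame: {step_change:.3f}, first-to-last: {total_change:.3f}")
```

Because W only moves by 0.02 per frame, consecutive frames differ only slightly, while the first and last frames end up clearly different: the pattern morphs in place rather than sliding across the surface.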

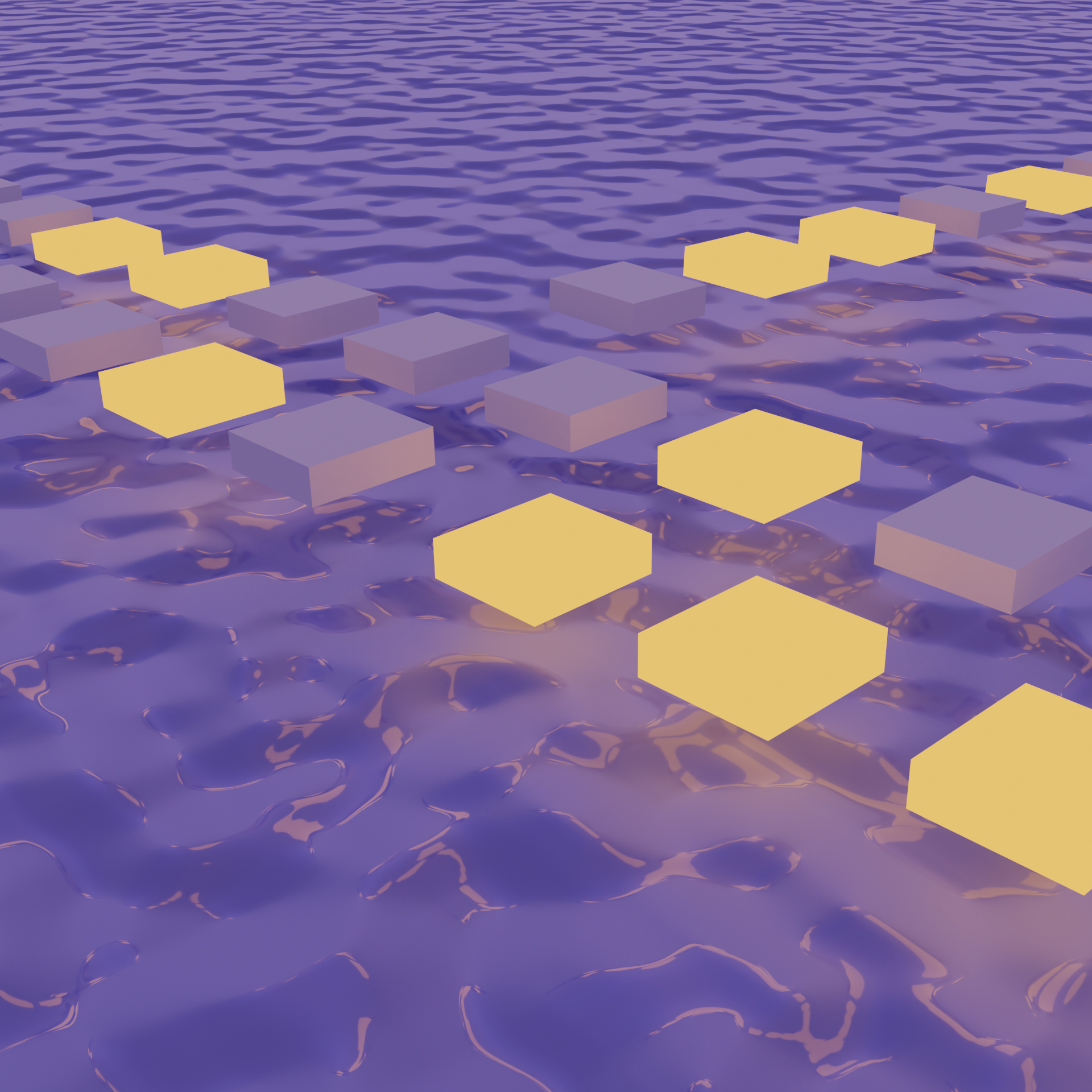

This is what I keyframed to animate my little scene for @vrdreamjournal, a still seen below:

Importantly, for future reference: hovering over a parameter (it changes color) and pressing I will keyframe that value. A lovely and necessary reminder of how to animate things in Blender.

Things do not quite look as I’d want them to look in the final render, but I am calling it for the purpose of keeping a healthy dose of energy moving through vrdreamjournal – we can keep learning, damnit. (lol it’s the reflections. What is going on.)

I really needed to reset my expectations for how long it takes to render out a decent animation. I had been using a 1080x1080 frame for my other Instagram posts, but it is very time-consuming to render that out at the number of samples I wanted (I dropped them from 128 to 64).

I ended up creating a 5-second video at 960x960, and it took 40 minutes(!) to render. I need to keep figuring out better ways to produce my animations… and maybe be satisfied with fewer render samples (and more noise) while I am editing the scene, since the feedback loop was way too slow to be interesting to work with. You will have to follow me on Instagram for the video: @vrdreamjournal.
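Some back-of-the-envelope render arithmetic, as a minimal sketch. It assumes a 24 fps frame rate (the frame rate isn’t stated here) and that the 40-minute render used 64 samples, and it treats render time as scaling linearly with pixel count and sample count, which is only a rough simplification.

```python
# Back-of-the-envelope render budget for the 5-second clip.
# ASSUMPTIONS (not stated in the post): 24 fps, 64 samples for the 40-minute
# render, and render time scaling linearly with pixel count and sample count.
FPS = 24
DURATION_S = 5
RENDER_MINUTES = 40

frames = DURATION_S * FPS                          # 120 frames
seconds_per_frame = RENDER_MINUTES * 60 / frames   # 20.0 s per frame

# 1080x1080 has (1080 / 960) ** 2 = 1.265625x as many pixels as 960x960
pixel_ratio = (1080 * 1080) / (960 * 960)
sample_ratio = 128 / 64

# Naive estimate for the same clip at 1080x1080 and 128 samples
estimate_minutes = RENDER_MINUTES * pixel_ratio * sample_ratio  # 101.25 min

print(f"{frames} frames at {seconds_per_frame:.1f} s each")
print(f"1080x1080 @ 128 samples: ~{estimate_minutes:.0f} min")
```

Under these assumptions each frame costs about 20 seconds, and the original 1080x1080 / 128-sample plan would have taken roughly two and a half times as long as the 960x960 / 64-sample render.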